Research

Neuromorphic/In-Memory Computing

Our research focuses on memory-based deep learning techniques, including SRAM/ReRAM-based processing-in-memory (PIM) for deep learning and robust deep learning inference based on Flash memory.

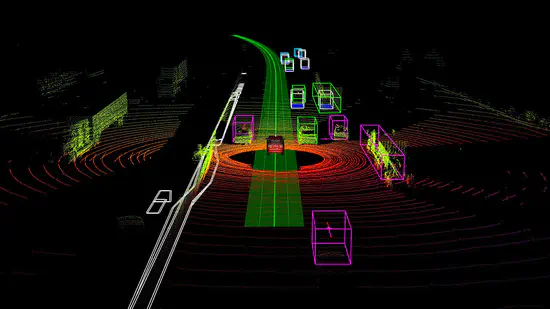

Machine Learning for 3D Data Processing

Because three-dimensional (3D) vision data provide abundant spatial information, they are widely used in many areas, including autonomous driving and mobile robots. We aim to develop efficient and accurate 3D data processing techniques for tasks such as classification, segmentation, and pose estimation.

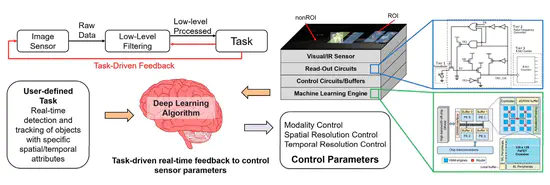

Sensor Systems with Integrated Deep Learning

To maximize the performance and efficiency of deep-learning-based data processing, we are exploring sensor platform designs optimized for deep learning by leveraging the interactions between the sensor platform and the DNN.

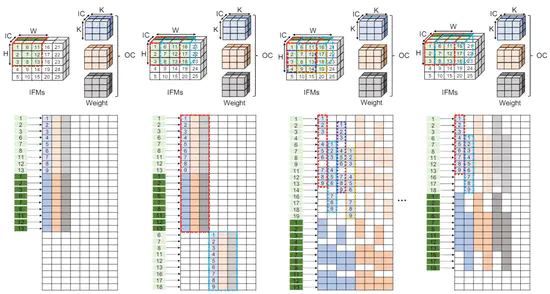

Deep Learning Model Compression and Acceleration

Deep neural networks (DNNs) are widely adopted in IoT edge devices to make them more intelligent. However, their biggest challenges are storage demand and computational complexity. Because DNNs contain a large number of synaptic weights, memory demand is a major obstacle to deploying them, especially on memory-constrained platforms such as mobile systems.

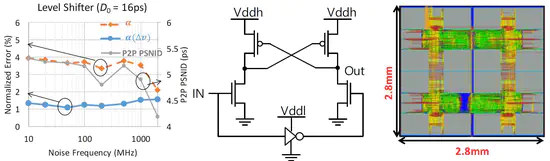

Digital Design Techniques

Timing errors caused by power supply noise (PSN) in digital circuits can be tolerated by adding voltage margins. We developed design guidelines that avoid PSN-induced overdesign, especially for low-cost IoT devices.

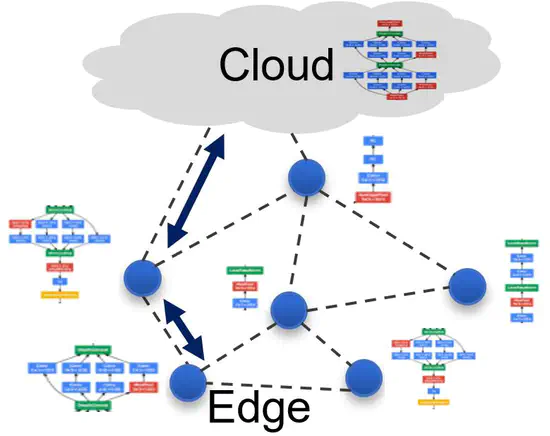

Edge-Host Collaborative Deep Learning

Building on a study of the trade-offs among energy, accuracy, and throughput of an edge platform with an embedded DNN, we are exploring collaborative DNN inference between the edge and the host to make optimal use of the available bandwidth and energy.

Low-Power Crypto Engines

Physical security is a key challenge for resource-constrained edge platforms, which need hardware that is both secure and ultra-low-power. Our research seeks to understand the interactions between low-power design and security in edge devices, and to explore innovations that enhance security at minimal power cost.

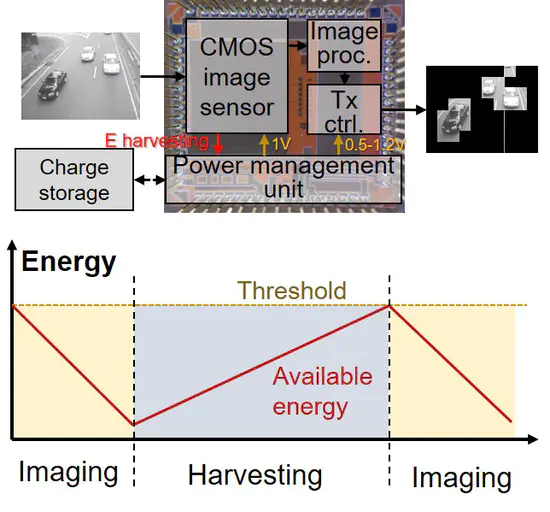

Low-Power Image Sensor System

The objective of this work is to design a self-powered, environment-adaptive sensor node that maintains a target Quality-of-Service (QoS) in a time-varying environment. We will design a wireless image sensor node that incorporates a CMOS imager, a digital signal processing unit, and an RF transceiver, and that is powered by energy harvested from the environment.

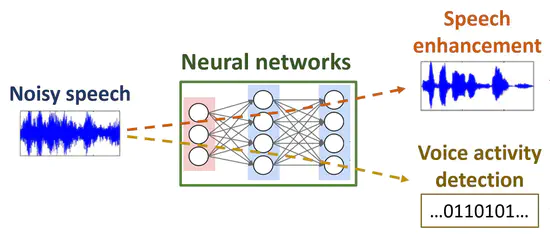

Machine Learning for Audio/Speech Processing

Our research focuses on front-end audio processing techniques for speech processing, such as voice activity detection (VAD), noise suppression, direction of arrival (DoA) estimation, and classification.

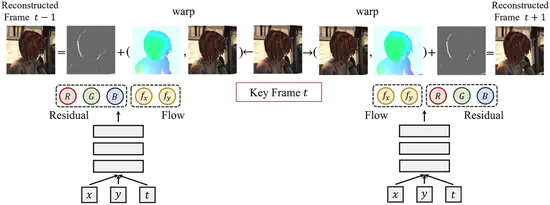

Machine Learning for Image/Video Processing

Machine learning and deep learning have made rapid progress in many computer vision applications over a short period. We focus on diverse techniques for image and video processing powered by machine/deep learning.

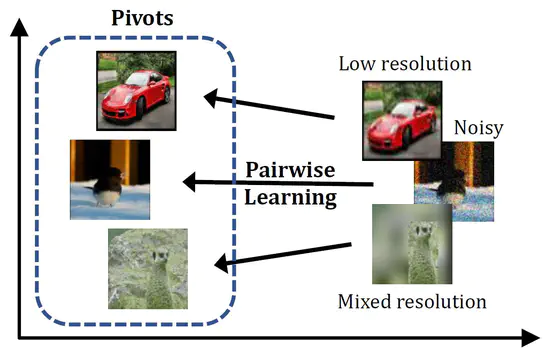

Variation-Robust Deep Learning

One of the challenges of deploying deep neural networks in sensor platforms is robustness to variations: structural noise (adversarial images), inherent random noise (image perturbation), input scene variability, and weight value errors.

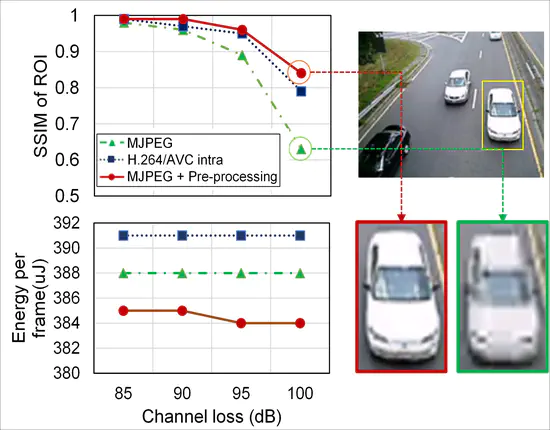

Low-Power Image/Video Processing

A critical goal in image sensor node design is to deliver high-quality visual information under stringent energy and bandwidth constraints. This goal becomes more challenging under dynamic conditions such as environmental noise and varying wireless channel conditions.