[C11] Design of an Energy-Efficient Accelerator for Training of Convolutional Neural Networks using Frequency-Domain Computation

Abstract

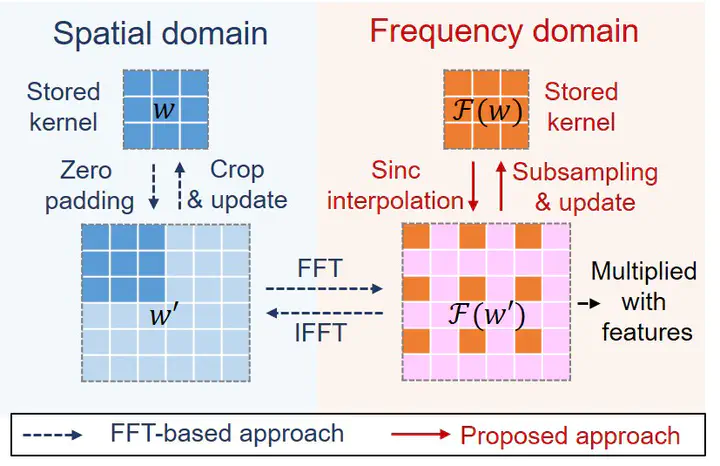

Training convolutional neural networks (CNNs) demands substantial computation and memory. This paper presents the design of a frequency-domain accelerator for energy-efficient CNN training. Using Fourier representations of the parameters, we replace convolutions with simpler pointwise multiplications. To eliminate the Fourier transforms at every layer, we train the network entirely in the frequency domain using approximate frequency-domain nonlinear operations. We further reduce computation and memory requirements using sinc interpolation and Hermitian symmetry. The accelerator is designed and synthesized in 28 nm CMOS and prototyped on an FPGA. Simulation results show that the proposed accelerator significantly reduces training time and energy for a target recognition accuracy.
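The two identities the abstract relies on can be illustrated with a minimal NumPy sketch. It is not the accelerator's implementation: it assumes circular (wrap-around) convolution, a kernel zero-padded to the input size, and real-valued data, and the function names are hypothetical. It shows that a convolution becomes a pointwise product of spectra, and that for real inputs Hermitian symmetry lets roughly half of the spectrum be stored.

```python
import numpy as np

def circular_conv2d_direct(x, w):
    """Direct 2-D circular convolution; x and w have the same shape."""
    n, m = x.shape
    out = np.zeros((n, m))
    for a in range(n):
        for b in range(m):
            # np.roll(w, (a, b))[i, j] == w[(i - a) % n, (j - b) % m]
            out += x[a, b] * np.roll(w, shift=(a, b), axis=(0, 1))
    return out

def circular_conv2d_fft(x, w):
    """Same result via the convolution theorem: pointwise product of spectra."""
    return np.fft.ifft2(np.fft.fft2(x) * np.fft.fft2(w)).real

def circular_conv2d_rfft(x, w):
    """Real-input variant: Hermitian symmetry means only about half of the
    spectrum (the non-redundant columns) is computed and multiplied."""
    return np.fft.irfft2(np.fft.rfft2(x) * np.fft.rfft2(w), s=x.shape)

def pad_kernel(k, shape):
    """Zero-pad a small kernel to the input size (an assumption of this sketch)."""
    w = np.zeros(shape)
    w[: k.shape[0], : k.shape[1]] = k
    return w

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8))        # toy feature map
k = rng.standard_normal((3, 3))        # toy 3x3 kernel
w = pad_kernel(k, x.shape)

direct = circular_conv2d_direct(x, w)
via_fft = circular_conv2d_fft(x, w)
via_rfft = circular_conv2d_rfft(x, w)

print(np.allclose(direct, via_fft), np.allclose(direct, via_rfft))
print(np.fft.fft2(x).shape, np.fft.rfft2(x).shape)  # full vs. half spectrum
```

For an 8×8 real input, the half spectrum has shape (8, 5) instead of (8, 8), which is where the memory saving from Hermitian symmetry comes from. The sinc interpolation and the approximate frequency-domain nonlinearities mentioned in the abstract are not covered by this sketch.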