[C34] NAS-VAD: Neural Architecture Search for Voice Activity Detection

Abstract

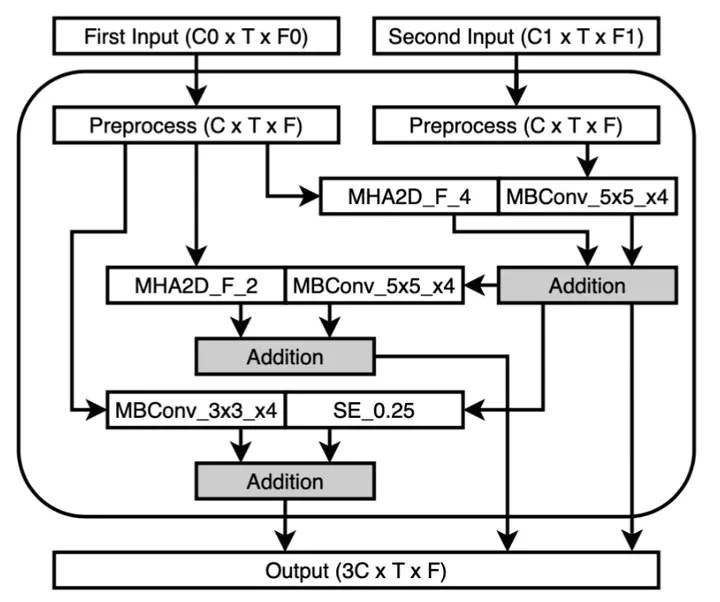

Various neural network-based approaches have been proposed for more robust and accurate voice activity detection (VAD). Manual design of such neural architectures is an error-prone and time-consuming process, which prompted the development of neural architecture search (NAS) that automatically design and optimize network architectures. While NAS has been successfully applied to improve performance in a variety of tasks, it has not yet been exploited in the VAD domain. In this paper, we present the first work that utilizes NAS approaches on the VAD task. To effectively search architectures for the VAD task, we propose a modified macro structure and a new search space with a much broader range of operations that includes attention operations. The results show that the network structures found by the propose NAS framework outperform previous manually designed state-of-the-art VAD models in various noise-added and real-world-recorded datasets. We also show that the architectures searched on a particular dataset achieve improved generalization performance on unseen audio datasets. Our code and models are available at https://github.com/daniel03c1/NAS_VAD.