[C9] Adaptive Weight Compression for Memory-Efficient Neural Networks

Abstract

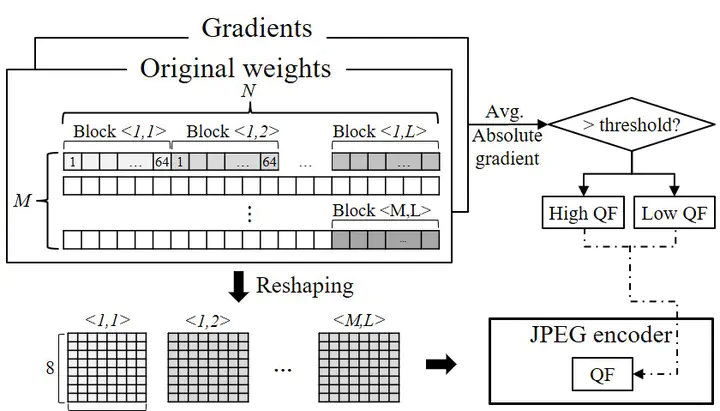

Neural networks require significant memory capacity and bandwidth to store and access a large number of synaptic weights. This paper applies JPEG image encoding to compress the weights by exploiting the spatial locality and smoothness of the weight matrix. To minimize the accuracy loss caused by JPEG encoding, we propose adaptively controlling the quantization factor of the JPEG algorithm according to the error sensitivity (gradient) of each weight: blocks with higher sensitivity are compressed less to preserve accuracy. The adaptive compression reduces the memory requirement, which in turn yields higher performance and lower energy consumption in neural network hardware. Simulation of inference hardware for a multilayer perceptron on the MNIST dataset shows up to 42X compression with less than 1% loss of recognition accuracy, resulting in 3X higher effective memory bandwidth and ~19X lower system energy.
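The idea of sensitivity-dependent quantization can be illustrated with a minimal sketch. It is not the implementation evaluated in the paper: it assumes 8x8 blocks, a single uniform quantization step per block instead of a full JPEG quantization table, no entropy coding, and a simple median threshold on the mean gradient magnitude to choose between two step sizes. The names `dct_matrix`, `compress_block`, `adaptive_compress`, `q_low`, and `q_high` are hypothetical.

```python
import numpy as np


def dct_matrix(n=8):
    """Orthonormal DCT-II basis matrix (n x n), the transform used by JPEG."""
    k = np.arange(n).reshape(-1, 1)  # frequency index
    i = np.arange(n).reshape(1, -1)  # sample index
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def compress_block(block, q_step, C):
    """2-D DCT, uniform quantization with step q_step, then reconstruction."""
    coeffs = C @ block @ C.T
    quantized = np.round(coeffs / q_step)      # integers that would be stored
    recon = C.T @ (quantized * q_step) @ C     # dequantize and inverse DCT
    return quantized, recon


def adaptive_compress(W, G, q_low=0.02, q_high=0.2, n=8):
    """Compress weight matrix W block by block.

    G holds the per-weight error sensitivity (gradient). Blocks whose mean
    |gradient| exceeds the median get the finer step q_low (assumed policy);
    all other blocks get the coarser step q_high.
    W and G must be float arrays whose dimensions are multiples of n.
    """
    C = dct_matrix(n)
    rows, cols = W.shape
    # Mean sensitivity of each n x n block.
    sens = np.abs(G).reshape(rows // n, n, cols // n, n).mean(axis=(1, 3))
    threshold = np.median(sens)

    W_hat = np.empty_like(W)
    nonzeros = 0
    for bi in range(rows // n):
        for bj in range(cols // n):
            q_step = q_low if sens[bi, bj] > threshold else q_high
            sl = (slice(bi * n, (bi + 1) * n), slice(bj * n, (bj + 1) * n))
            quantized, recon = compress_block(W[sl], q_step, C)
            W_hat[sl] = recon
            nonzeros += np.count_nonzero(quantized)
    return W_hat, nonzeros
```

In this sketch the count of nonzero quantized coefficients is only a rough proxy for compressed size; a coarser step zeroes more high-frequency coefficients in smooth blocks, which is where the compression comes from, while the finer step limits the reconstruction error in sensitive blocks.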