[J21] A Reconfigurable Neural Architecture for Edge–Cloud Collaborative Real-Time Object Detection

Abstract

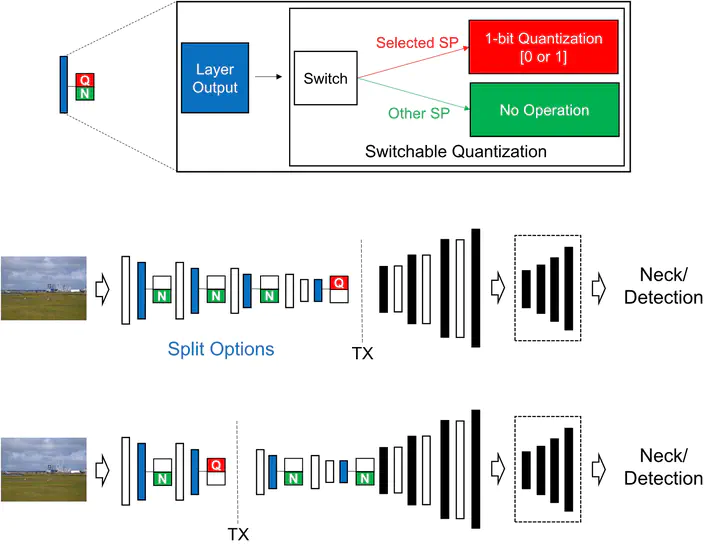

Although recent advances in deep neural networks (DNNs) have enabled remarkable performance on various computer vision tasks, edge devices struggle to perform real-time inference with complex DNN models because of their stringent resource constraints. To improve inference throughput, recent studies have proposed collaborative intelligence (CI), which splits DNN computation between edge and cloud platforms, but mostly for simple tasks such as image classification. For general DNN-based object detectors with a branching architecture, however, CI is severely limited by a significant feature transmission overhead. To resolve this issue, we propose a reconfigurable DNN architecture for real-time object detection that can configure the optimal split point for a given edge–cloud CI environment. The proposed architecture makes the DNN model splittable through a feature reconstruction network and asymmetric scaling. Building on this splittable architecture, we integrate the independent splittable models for each split point into a single-weight reconfigurable model that enables multipath inference through switchable quantization and distribution matching. Finally, we introduce an adaptive procedure for applying the reconfigurable model to efficient CI, which includes asymmetric scale configuration and split point selection. In a performance evaluation with YOLOv5 as the baseline on the NVIDIA Jetson TX2 platform in a WiFi environment, the proposed architecture achieved 30 frames/s, 2.6× and 1.6× higher than edge-only and cloud-only inference, respectively.
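To make the idea of bandwidth-dependent split point selection concrete, the following is a minimal sketch, not the authors' procedure. It assumes a pipelined setting in which edge compute, feature transmission, and cloud compute overlap across consecutive frames, so the slowest stage bounds the frame rate. The `SplitPoint` profile, the per-split quantization bit width (a simple stand-in for switchable quantization), and all latency and feature-size numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SplitPoint:
    """Hypothetical profile of one candidate split point (illustrative values only)."""
    name: str
    edge_ms: float       # on-device compute time up to the split
    cloud_ms: float      # server compute time after the split
    feature_elems: int   # number of feature elements sent at this split
    bits: int            # assumed quantization bit width of the sent feature


def transmit_ms(sp: SplitPoint, bandwidth_mbps: float) -> float:
    """Time (ms) to send the quantized feature over a link of the given bandwidth."""
    payload_bits = sp.feature_elems * sp.bits
    return payload_bits / (bandwidth_mbps * 1e6) * 1e3


def pipelined_fps(sp: SplitPoint, bandwidth_mbps: float) -> float:
    """Assumed pipelined throughput: the slowest of edge, link, and cloud stages
    determines how many frames per second can be completed."""
    bottleneck_ms = max(sp.edge_ms, transmit_ms(sp, bandwidth_mbps), sp.cloud_ms)
    return 1e3 / bottleneck_ms


def select_split(candidates, bandwidth_mbps: float) -> SplitPoint:
    """Return the candidate with the highest estimated throughput."""
    return max(candidates, key=lambda sp: pipelined_fps(sp, bandwidth_mbps))


# Hypothetical candidates; "edge-only" transmits nothing and runs no cloud stage.
candidates = [
    SplitPoint("edge-only", edge_ms=90.0, cloud_ms=0.0, feature_elems=0, bits=8),
    SplitPoint("early", edge_ms=10.0, cloud_ms=12.0, feature_elems=64 * 160 * 160, bits=8),
    SplitPoint("middle", edge_ms=30.0, cloud_ms=6.0, feature_elems=128 * 40 * 40, bits=4),
    SplitPoint("late", edge_ms=60.0, cloud_ms=2.0, feature_elems=256 * 20 * 20, bits=4),
]

if __name__ == "__main__":
    for mbps in (50.0, 5.0, 2.0):
        best = select_split(candidates, mbps)
        print(f"{mbps:>5.1f} Mbit/s -> {best.name} ({pipelined_fps(best, mbps):.1f} fps)")
```

With these made-up profiles, a fast link favors the middle split, a slower link shifts the choice toward the late split (which sends a smaller feature), and a very slow link makes edge-only inference the best option. This illustrates why a single model that can switch among split points is useful when network conditions vary.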