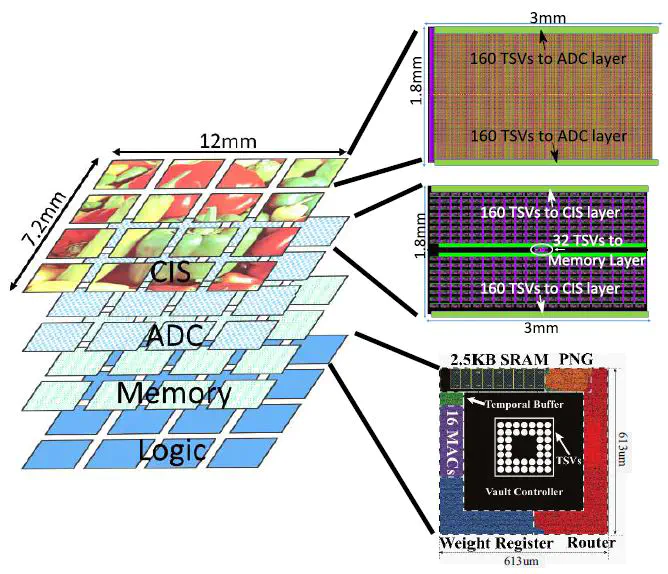

[J6] 3-D Stacked Image Sensor With Deep Neural Network Computation

Abstract

This paper investigates the power and performance trade-offs associated with integrating deep neural network (DNN) computation in an image sensor. The paper presents the design of Neurosensor, a CMOS image sensor with 3-D stacking of the pixel array, read-out circuits, memory, and computing logic for DNNs. The analysis shows that integrating DNN computation reduces transmission latency (and energy), but at the expense of processing and memory access latency (and energy). Hence, given a specific DNN and transmission bandwidth, there exists an optimal number of layers that should be computed in the sensor to maximize energy efficiency. In general, it is often more efficient to integrate memory within the sensor stack and/or implement only the feature extraction layers on the sensor, and optimized configurations can achieve up to 90× improvement in energy efficiency compared to the baseline. Further, coupled power, thermal, and noise simulation demonstrates that integrating DNN computation can increase the pixel-array temperature, resulting in higher noise and hence lower classification accuracy.
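The trade-off behind the optimal layer count can be illustrated with a toy energy model. This is a minimal sketch and not the paper's model: the `Layer` fields, the function names `total_energy` and `best_split`, and every number are hypothetical, chosen only to show how the best split point moves with the cost of transmission. It assumes that only sensor-side energy is counted, and that the sensor transmits either the raw image (no layers on the sensor) or the output of the last on-sensor layer.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    compute_energy: float  # hypothetical energy to compute the layer on the sensor
    memory_energy: float   # hypothetical energy for its weight/activation accesses
    output_bits: int       # size of the layer's output in bits


def total_energy(layers, input_bits, split, energy_per_bit):
    """Sensor-side energy when the first `split` layers run on the sensor."""
    on_sensor = sum(l.compute_energy + l.memory_energy for l in layers[:split])
    # With no layers on the sensor, the raw image itself is transmitted.
    tx_bits = input_bits if split == 0 else layers[split - 1].output_bits
    return on_sensor + tx_bits * energy_per_bit


def best_split(layers, input_bits, energy_per_bit):
    """Number of on-sensor layers that minimizes the modeled energy."""
    return min(
        range(len(layers) + 1),
        key=lambda k: total_energy(layers, input_bits, k, energy_per_bit),
    )


# Hypothetical network: two feature-extraction layers that shrink the data,
# followed by a memory-heavy fully connected layer. All values are made up.
layers = [
    Layer("conv1", compute_energy=100, memory_energy=50, output_bits=400),
    Layer("conv2", compute_energy=100, memory_energy=50, output_bits=100),
    Layer("fc1", compute_energy=50, memory_energy=400, output_bits=10),
]

expensive_link = best_split(layers, input_bits=1000, energy_per_bit=1.0)
cheap_link = best_split(layers, input_bits=1000, energy_per_bit=0.1)
print(expensive_link, cheap_link)
```

With these made-up values, a costly link favors running only the two feature-extraction layers on the sensor, while a cheap link favors sending the raw image. This mirrors the abstract's qualitative claim without reproducing any of its results.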